Generating Random Greatness

How can teams build with AI in an uncertain world?

AI-assisted coding and vibe coding are a popular focus for how AI can make a team better. These tools democratize coding by allowing anyone who can describe a product to create it. The better you are at describing, the better the tool will be able to create your product. The success of tools like Lovable.dev and Bolt.new highlights just how much demand there is for this capability.

I’ve felt it myself as recent advancements in AI models have made it easier than ever to rely on AI agents to build on our behalf. This shift is what made this way of building possible. Where even simple coded solutions were once often inaccurate, we’re now getting beautiful, complex interfaces.

However, that reality is largely a solo endeavor, and the tools and practices used are individualized. Because this is an emerging field, those who code with AI naturally use their own chosen tools, models, techniques, and workflows. This reflects a new ability to work with users in a way not previously possible. AI-powered software can now adapt to you and work with how you see the world.

In a world where everyone is running their AI agents their own way and seeing results they like, can we find a way to build teams that use these tools? Should we try?

Faith in Workflows

We have relationships with the models that run the software we use in our daily workflow. These AIs meet us where we are, so the software is not just a tool anymore. It’s now a colleague or a friend that helps us when we’re frustrated and trying to get something to work. We’ve faced challenges together, and these AIs have established a bond of sorts through their help.

I have a better relationship with GPT-4 than I did with o3 or GPT-4o. I find 4 less conversational but more accurate. That focus on concise accuracy was a benefit to me as I leveraged AI in my products. I want it to focus clearly on a single goal. These values shaped what I cared about and how I evaluated which model I would pick.

Those values and our past experience build personal relationships, which shape our perspective even when we try to think objectively about how AI tools work. A familiarity bias toward workflows we know have worked well becomes evident.

Because you’re familiar with how a tool works, you’re willing to tolerate bad results from it. You know from past experience that this model is capable of giving you something great. Familiarity feels like reliability, even though they are different things.

When first trying out a new AI tool, each failure feels critical. We don’t know if this tool will be capable of coming up with good results, and how long we’re willing to wait to find out is determined by our expectations from other tools. Our brain tells us, “Wouldn’t it just be better to go back to a tool you know well that already seems to understand what you want?”

When we start to bring teams of smart AI builders together, it’s notable that not everyone wants to use the same tools. They don’t have the same relationships and won’t believe in experiences they haven’t had. Without a significant shift in capability, it’s hard to dislodge their faith and practices.

Randomness at Play

Complicating this is something less personal and more mechanical: randomness. If you ask for the same thing twice, you won’t necessarily get the same output. One run nails your brief; the next runs down a side path and gets attached to it. It’s not malice or incompetence; it’s just how the system functions.

When OpenAI announced their latest GPT-5 model, they highlighted a number of impressive web apps that were built on the spot with their latest model. Sometimes, demos included several different responses to the same prompt and the researcher, Yan, noted:

“One thing to note, GPT-5, just like many of our other models, has a lot of diversity in its answers. What I like doing, especially when you do this type of vibe coding, is to take this message and ask it multiple times through GPT-5; then you can decide which ones you prefer.”

Model providers, like OpenAI, work hard to build the best model they can. Running a prompt multiple times gives you a chance to see the model in its best light. However, it also highlights that any single run might leave you unhappy with what you get.

So now when teams are working together to determine which tools they’ll want to use, they may have luck working against them. A lead’s early evaluations may show poor results on a model everyone else on the team loves to use daily. Is the problem how the lead used it in their workflow, or is it just an unlucky generation? That’s a hard question to answer.
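To put rough numbers on that luck, here is a minimal Python sketch. It assumes each generation is an independent attempt with a fixed chance of being good, which is a simplification. The 70% figure and the function name are hypothetical, chosen only for illustration.

```python
from math import comb


def prob_at_most_good(p_good: float, trials: int, max_good: int) -> float:
    """Binomial probability of seeing `max_good` or fewer good results
    in `trials` independent runs, each good with probability `p_good`."""
    return sum(
        comb(trials, k) * p_good**k * (1 - p_good) ** (trials - k)
        for k in range(max_good + 1)
    )


# Hypothetical scenario: a tool that gives a good result 70% of the time,
# judged by a lead who tries it three times and sees at most one good run.
unlucky = prob_at_most_good(p_good=0.7, trials=3, max_good=1)
print(f"{unlucky:.3f}")  # prints 0.216
```

Under those assumptions, roughly one three-run evaluation in five would make a mostly reliable tool look bad. A handful of early runs is therefore weak evidence either way.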

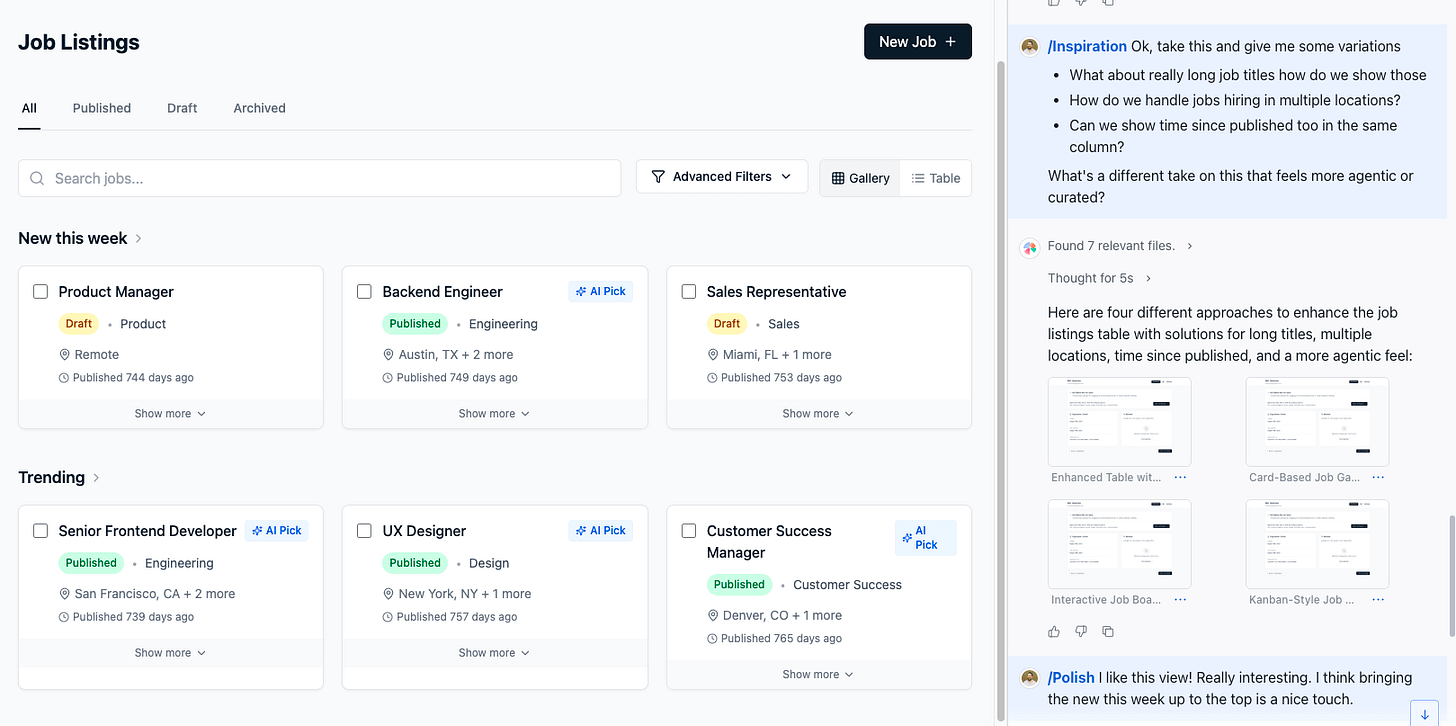

Recently, I worked through building a new UI for a project and decided to test out Magic Patterns, another AI coding platform that’s focused on web design patterns. As I worked to build out a new webpage, I noticed they had a Brainstorm mode. This mode runs your prompt four times and presents the results as four different examples. As a result, you get a bit of variety as well as a better chance at getting a really great result out of one of the attempts.

Image generation tools have been doing this for a while. We know that those tools sometimes generate bad stuff, but because we generate 4 at a time (or in ChatGPT’s case, an AI picks the best of 4 behind the scenes), we usually get at least one decent image. When none of the four is decent, we know our prompt might need to be changed to something that produces a better output in the model.
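The arithmetic behind generating several options at once can be sketched in a few lines of Python. This is an illustration, not a description of how any of these products work: it assumes every attempt is independent and has the same chance of being good, and the function name and the 50% figure are hypothetical.

```python
def chance_of_at_least_one_good(p_good: float, n: int) -> float:
    """Probability that at least one of `n` independent attempts is good,
    given that each attempt is good with probability `p_good`."""
    return 1 - (1 - p_good) ** n


# Hypothetical prompt that produces a good result half the time.
for n in (1, 2, 4, 8):
    print(n, round(chance_of_at_least_one_good(0.5, n), 4))
```

Under those assumptions, four attempts at a coin-flip prompt give about a 94% chance of at least one good result. The same formula shows the limit: if the chance per attempt is close to zero, extra attempts barely help, which is the signal that the prompt itself needs to change.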

This works great with images we can see right away, but it doesn’t work as well with long segments of text. It’s hard to read four different code bases and see which one is the best. However, we can see the output as a website or application and perhaps that’s enough to start to understand what these tools we’re coding with are actually good at.

Emergent Tech Means No Standards

As teams look to adopt major new technology, the term “best practices” gets thrown around frequently. Leaders want assurance that whatever effort is put into the practice is going to give them a positive outcome. Otherwise, the technology is seen as a toy that doesn’t serve them much purpose.

AI coding has been seen as an interesting toy, and it initially deserved that description. Attempts to get AI to meaningfully contribute to coding environments have been plagued by unremarkable code, fabricated API integrations, and misaligned feature development. These early impressions have left their mark, and even now many developers hold the opinion that AI coding is still a distraction that isn’t capable of doing anything real.

Given Claude Code’s success at producing meaningful output and the growing adoption of other development tools, that belief is starting to weaken. It’s hard to ignore that something significant is happening. But how are these same developers going to communicate to their leaders what to do about these developments and where to invest?

Large companies offer answers to these questions. For example, Microsoft is keen to sell you on a suite of AI tools for Copilot that bring AI into your business. These large companies want to become the new standard choice for AI and establish common ways of working for your team. As teams look for standards, there’s always someone eager to sell them one.

However, AI is currently an emergent technology. As I’ve mentioned previously, AI solutions that were built just months ago are becoming obsolete as new capabilities become commonplace and models become more available. Because outputs vary and standards lag, teams face a moving target.

Teams building with AI need to assume that models will get better, capabilities will change, and the ways they’re currently engineering with AI are temporary. This is a challenging position for developers and leaders because explaining how the team is investing in AI falls outside the usual norms of technical planning.

Building in the In-Between

Now, teams that look to work together have many interesting tools available to them. It takes a bit of curiosity and a bit of creativity to leverage the tools into workflows that are beneficial—but perhaps more importantly, it takes something we’re not always comfortable with: uncertainty.

The truth is, we’re in a liminal space with AI-powered development. The tools that seem indispensable today might be obsolete in three months. The workflows we painstakingly establish could become irrelevant with the next model release. And the standards we try to impose might actually limit us from discovering what’s truly possible.

This doesn’t mean teams should abandon all structure—disorder isn’t the answer either. Instead, teams need to cultivate what might feel like contradictory qualities: being structured enough to collaborate effectively while remaining fluid enough to adapt when everything shifts beneath their feet. It means holding our tools lightly, our processes flexibly, and our assumptions tentatively.

The most successful teams will be those that stay curious about what’s emerging, rather than defensive about what’s familiar. They’ll create space for experimentation alongside execution. They’ll document what works today while staying alert to what might work better tomorrow. Most importantly, they’ll recognize that the discomfort of not having established best practices is actually a feature, not a bug—it keeps us awake to possibilities we haven’t imagined yet.

In this world where AI agents adapt to individual working styles and randomness plays a role in every output, perhaps the real skill isn’t in standardizing our approaches but in learning to orchestrate diversity. Teams that can harness different tools, different models, and different workflows—finding harmony in that variation rather than enforcing uniformity—might discover capabilities that no single approach could achieve.

The shadow we’re building in isn’t darkness; it’s the space before dawn, where shapes are still forming and definitions remain fluid. Teams that can navigate by feel, stay aware of the shifting landscape, and remain genuinely curious about what they don’t yet know—these are the teams that will define what building with AI actually means.

In the end, the question isn’t whether we should build teams that use these tools. It’s whether we can build teams that are as adaptive, curious, and aware as the AI we’re learning to work alongside.
